Linked Data for the Uninitiated (Part 1)

This two-part post is my follow-up to LAWDI 2012, officially known as the first Linked Ancient World Data Institute. It brought together a multi-disciplinary group of digital scholars at NYU’s Institute for the Study of the Ancient World (ISAW) whose interests incorporate the Ancient Mediterranean and Near East. This essay is cross-posted on the GC Digital Fellows blog.*

In preparation for LAWDI 2012, I wrote a post called “Linked Data: A Theory,” pondering the concepts behind Linked Data, but it was clear to me from the beginning that I needed a more sturdy vocabulary and concrete skills in order to put these ideas into practice. This essay explores how Linked Data can be useful to digital scholars with any level of technical experience and, ultimately, why it’s worth the trouble to tackle a new skill set while building a digital project.

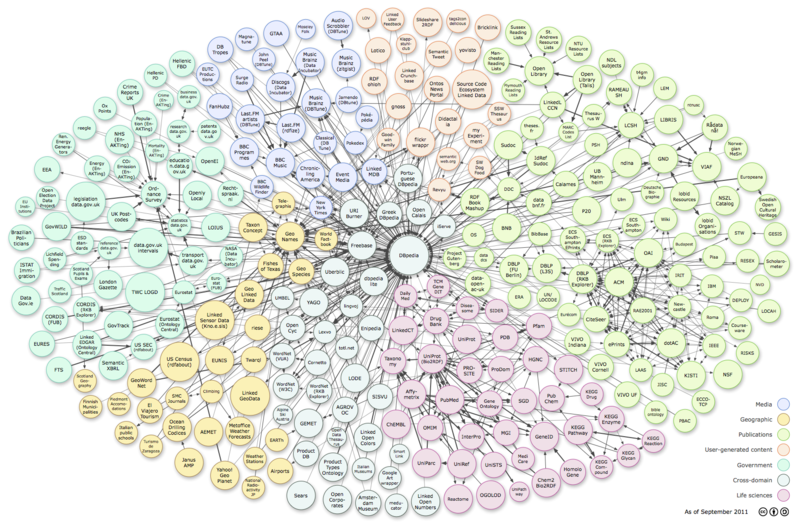

Linked Data is a philosophy applied to web development. It incorporates best practices through links (that are both human- and machine-readable) to build connections between projects and data sets. The most effective scholarship acts as a springboard for other researchers who cite the work and build on its ideas. To maintain its relevancy, research must be published and shared. The same goes for data collected in support of that scholarship. Linked Data allows institutions and individuals to share resources in order to make data available to many users, all remixing or reinterpreting it to produce new scholarship.

Linked Data is often incorporated into conversations about Open Access, a crucial movement intended to counteract academia’s traditional exclusionary practices by making scholarship freely available to the public. It is also frequently associated with the Open Source movement, referring to projects in which the source code is freely available so that another developer can use that project as the basis for another, often tweaking or adapting it in new ways in a process called forking. This code is often deposited on sites like GitHub for sharing.

The inherently collaborative nature of Linked Data underscores the fact that “links” and “networks” are most useful when they refer to people as well as data. LAWDI has been particularly productive because it brings together people and organizations whose data sets have a good chance of being useful to one another.

LAWDI reading assignments provide a good overview of Linked Data concepts for readers who are familiar with developing or overseeing digital projects. The World Wide Web Consortium (W3C) is a network of organizations, led by Tim Berners-Lee (inventor of the World Wide Web), that develops and recommends standards for best practice in building the Semantic (linked) Web. The W3C website is considered by many to be the gold standard for web-related definitions and explanations. The information below is intended to precede those readings with a more general overview of how those projects are constructed.

Open Data

Linked Data connections are built with Open Data that has been made freely available to reuse or remix. For instance, Europeana and the Digital Public Library of America are huge repositories of data that have made their content available to everyone. (The opposite of Linked Data, by the way, is a “data silo,” a repository that isn’t linked or shared and is unavailable except to those with exclusive access.)

Making data open is the first step toward creating Linked Data. It’s essential, of course, to determine rights information before publishing or sharing data. Archaeologists usually have permission to collect and publish their own findings; bibliographies can generally be shared; museums or archives can choose to share their own collections. Work that is under copyright probably isn’t something that should be shared. Adopting a Creative Commons License is one way to signal that data is available to others who may want to use it. In addition to data being available, it must be structured in such a way that others can use it, preferably at the initial stage of data entry or digitization.

How the Web Operates

At the front end of a website, when the user types a URL (Uniform Resource Locator) into the browser’s address bar, a website is displayed in the browser window. It does this by calling up the website’s data from a server and translating it into the visual elements displayed on the page.

To fully integrate Linked Data into a project, it is necessary to understand how a digital project is constructed from the ground up. In a nutshell, URIs identify things; RDF describes those things; RDF works within a framework called XML; XML works with HTML; HTML sends information to your browser. In more complex terms, each of these elements operates at the back end of the website to form a series of relationships.

The building blocks of the Semantic Web are URIs (Uniform Resource Identifiers). These are the names of things described on a website. A URI looks like a string of characters that expresses the thing’s filename and/or path to the directory of the file.

A URI always begins with a scheme name followed by a colon and then the remainder of the URI. The scheme name indicates how the resource is accessed. For instance, a URI that begins with “http:” identifies a web resource, and one that starts with “ftp:” points to a file on an FTP site. Ideally, these URIs should be created from scratch, and not automatically generated by a Content Management System.
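To see these parts in practice, here is a minimal sketch that uses Python’s standard library to split a URI into its scheme and the remainder. The function name `describe_uri` and the FTP address are my own illustrations, not part of any Linked Data standard.

```python
from urllib.parse import urlsplit


def describe_uri(uri: str) -> dict:
    """Split a URI into the scheme, the host (authority), and the path."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,      # the text before the first colon
        "authority": parts.netloc,   # the host that serves the resource
        "path": parts.path,          # the location of the resource on that host
    }


if __name__ == "__main__":
    # The web address comes from the W3C example used later in this post;
    # the FTP address is hypothetical.
    for uri in ("http://www.example.org/index.html",
                "ftp://ftp.example.org/pub/file.txt"):
        print(describe_uri(uri))
```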

A “Cool URI” is an identifier that never changes: the domain name of the website is stable, and the data isn’t moved around or erased or altered. This is important because if someone else links to the data, they need to be certain that the link will remain useful in perpetuity. A “clean URI” clearly describes the item as simply as possible, without superfluous characters or confusing symbols. The Digital Classicist website lists Very Clean URIs with no “cruft,” a term that includes “.cgi”, “.php”, “.asp”, “?”, “&”, “=” or similar characters.
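As a rough illustration, the cruft list above can be turned into a quick check. This is only a sketch: the function `find_cruft` and both sample URIs are hypothetical, and a simple substring test like this one is cruder than a human reviewer (it would also flag “.aspx”, for instance).

```python
from typing import List

# Cruft markers taken from the list quoted above.
CRUFT_MARKERS = (".cgi", ".php", ".asp", "?", "&", "=")


def find_cruft(uri: str) -> List[str]:
    """Return the cruft markers that appear anywhere in the URI."""
    return [marker for marker in CRUFT_MARKERS if marker in uri]


if __name__ == "__main__":
    # Both URIs are invented for illustration.
    print(find_cruft("http://www.example.org/places/athens"))
    # → []
    print(find_cruft("http://www.example.org/item.php?id=42&view=full"))
    # → ['.php', '?', '&', '=']
```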

URIs are the basis of a data model called RDF (Resource Description Framework). In RDF, data is modeled as triples (statements made up of three ideas), which can then be written out in a serialization that exposes the data to machine readers as Linked Data. This data is intended to express relationships between people and/or things, using a controlled vocabulary for consistency among various projects and institutions.

Here’s an example from W3C’s RDF primer: An object whose URI is http://www.example.org/index.html has a creator named John Smith.

The RDF expression of this sentence would structure the data into three ideas:

a subject http://www.example.org/index.html

a predicate http://purl.org/dc/elements/1.1/creator

and an object http://www.example.org/staffid/85740

Note that each of these ideas can be expressed with a URI.
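For readers who like to see structure spelled out, here is a small sketch of that triple as a Python data structure, printed in the one-line N-Triples style (each URI in angle brackets, ending with a period). The `Triple` class and `to_ntriples` function are names I made up for this example, and the sketch handles only triples whose three parts are all URIs.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One RDF statement: subject, predicate, object (all URIs here)."""
    subject: str
    predicate: str
    object: str


def to_ntriples(triple: Triple) -> str:
    """Write a URI-only triple as a single N-Triples line."""
    return f"<{triple.subject}> <{triple.predicate}> <{triple.object}> ."


if __name__ == "__main__":
    statement = Triple(
        subject="http://www.example.org/index.html",
        predicate="http://purl.org/dc/elements/1.1/creator",
        object="http://www.example.org/staffid/85740",
    )
    print(to_ntriples(statement))
```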

RDF is a framework written in XML** (Extensible Markup Language). Markup languages are systems for annotating data that convey information about an item or instruct the software or web browser on what to display. All RDF triples written in XML are designed to describe data (by marking it up with machine-readable tags) to work in tandem with HTML (HyperText Markup Language), which displays data on the web. Technically speaking, a webpage is an HTML document.
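To make the XML connection concrete, the sketch below writes the John Smith triple as RDF/XML using only Python’s standard library. The function name `triple_to_rdfxml` is my own, and the sketch covers just this one kind of statement (a creator identified by a URI); a real project would more likely rely on a dedicated RDF library.

```python
import xml.etree.ElementTree as ET

# Namespaces for RDF itself and for the Dublin Core "creator" predicate.
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC_NS = "http://purl.org/dc/elements/1.1/"

# Ask ElementTree to use the familiar prefixes in its output.
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("dc", DC_NS)


def triple_to_rdfxml(subject: str, creator: str) -> str:
    """Express 'subject has creator' as an RDF/XML string."""
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    # rdf:Description names the subject of the statement.
    description = ET.SubElement(
        root, f"{{{RDF_NS}}}Description", {f"{{{RDF_NS}}}about": subject}
    )
    # dc:creator is the predicate; rdf:resource points at the object.
    ET.SubElement(
        description, f"{{{DC_NS}}}creator", {f"{{{RDF_NS}}}resource": creator}
    )
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(triple_to_rdfxml("http://www.example.org/index.html",
                           "http://www.example.org/staffid/85740"))
```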

By thoughtfully crafting clean URIs and incorporating them into RDF, a developer can facilitate Linked Data according to Tim Berners-Lee’s four “expectations of behavior” that are nicknamed the Four Rules for Linked Data. Quoted from his site, they are as follows:

- Use URIs as names for things

- Use HTTP URIs so that people can look up those names.

- When someone looks up a URI, provide useful information, using the standards (RDF*, SPARQL)

- Include links to other URIs. so that they can discover more things.

Berners-Lee calls these “simple.” Perhaps. But they’re not common sense. This leads to the question of whether creating Linked Data is advisable or even possible for a non-developer, an institution with limited resources, or a solo researcher.

In Part 2, I’ll discuss some options for creating Linked Data on a small scale and ways that existing Content Management Systems can be tweaked to be more Linked Data-friendly.

*This post represents a year of stumbling through data in ongoing efforts to become more digitally literate, an adventure supported by a GC Digital Fellowship and participation in the New Media Lab at the CUNY Graduate Center. My heartfelt thanks go out to all of the LAWDI 2012 presenters and participants, particularly Sebastian Heath and Chuck Jones of ISAW who have continued to help me aim for a LAWDI-friendly dissertation, and to Andrew Reinhard of ASCSA for keeping up Lawdite momentum. I’m also grateful to Aaron Knoll, former project advisor in the New Media Lab and overall good egg, for helping with an early draft of this post. Stephen Klein, Digital Services Librarian at the CUNY Graduate Center, has provided links and advice about sustainability. Conversations with Jared Simard about Mapping Mythology and Omeka are always helpful. Matt Rossi is an excellent writing consultant. Flaws and omissions are mine, of course, but it does, indeed, take a village to link data.

**UPDATE 9/13: In the original post, I stated that “RDF works within a framework called XML,” which could be considered an outdated view because linked data can be produced in a variety of formats. The RDF/XML model explained in this post is one example, but not the only option. For instance, RDF can also be written in JSON (JavaScript Object Notation). For a more technical account of RDF use and best practice, see W3C’s RDF primer.
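As a brief illustration of the JSON option, here is the same John Smith triple written in the style of JSON-LD, a W3C format for expressing RDF in JSON. I am assuming JSON-LD as the JSON flavor here, and the function name `triple_to_jsonld` is hypothetical; the sketch handles only triples whose object is a URI.

```python
import json


def triple_to_jsonld(subject: str, predicate: str, obj: str) -> str:
    """Write one URI-only triple as a JSON-LD node object."""
    node = {
        "@id": subject,              # the subject of the statement
        predicate: {"@id": obj},     # the predicate, pointing at the object
    }
    return json.dumps(node, indent=2)


if __name__ == "__main__":
    print(triple_to_jsonld(
        "http://www.example.org/index.html",
        "http://purl.org/dc/elements/1.1/creator",
        "http://www.example.org/staffid/85740",
    ))
```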

Thanks to Kingsley Uyi Idehen (@kidehen), Hugh Cayless (@hcayless), and Sebastian Heath (@sebhth) for a lively Twitter exchange on this topic when Kingsley pointed out that “Linked Data is format agnostic. Basically semantics, syntax, and encoding notations are loosely coupled. No XML or JSON specificity” (tweet: 6 June from @kidehen).